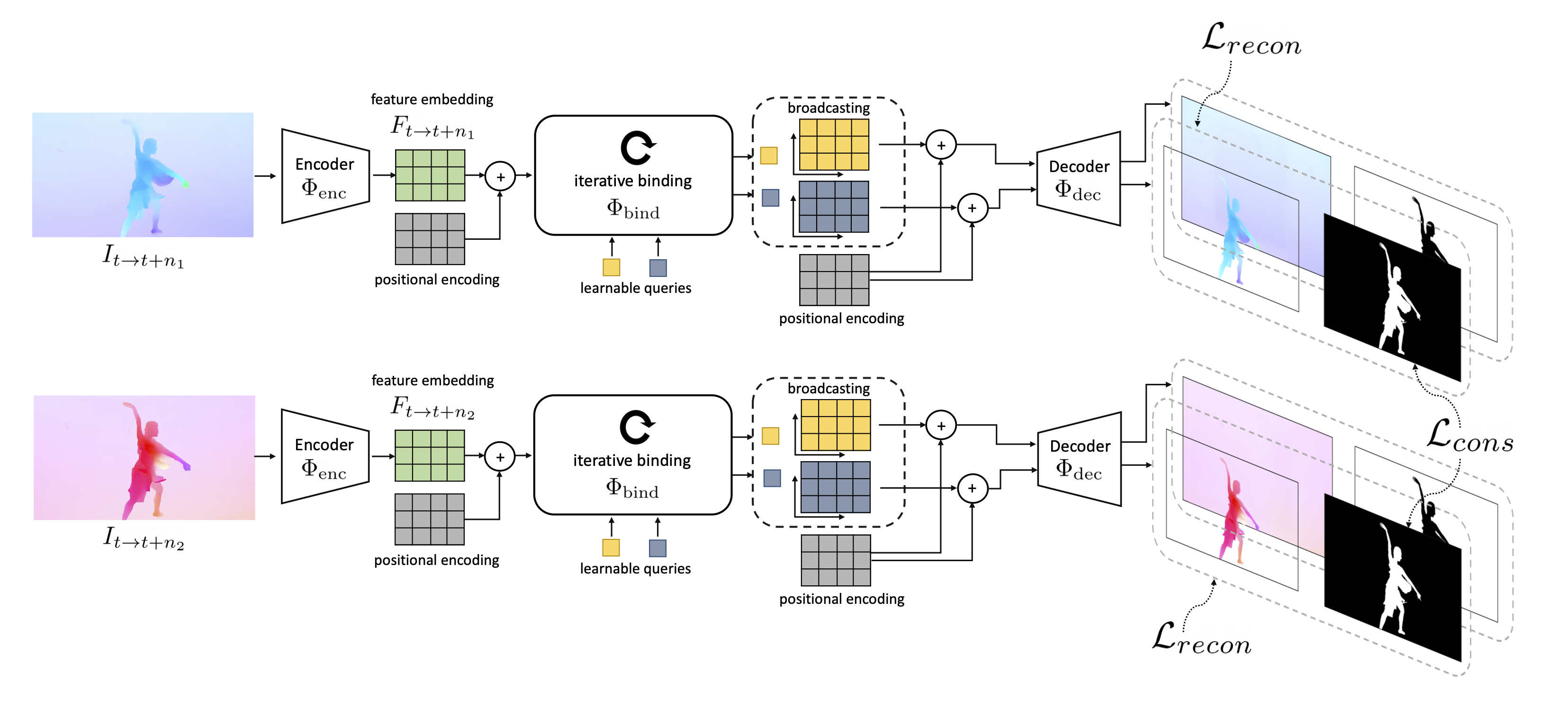

Animals have evolved highly functional visual systems to understand motion, which assists perception even in complex environments. In this paper, we work towards a computer vision system that segments objects by exploiting motion cues, i.e., motion segmentation. We make the following contributions. First, we introduce a simple variant of the Transformer to segment optical flow frames into primary objects and the background. Second, we train the architecture in a self-supervised manner, i.e., without using any manual annotations. Third, we analyze several critical components of our method and conduct thorough ablation studies to validate their necessity. Fourth, we evaluate the proposed architecture on public benchmarks (DAVIS2016, SegTrack-v2, and FBMS59). Despite using only optical flow as input, our approach achieves superior results compared to previous state-of-the-art self-supervised methods, while being an order of magnitude faster. We additionally evaluate on a challenging camouflage dataset (MoCA), where our approach significantly outperforms the other self-supervised approaches and compares favourably to the top supervised approach. This highlights the importance of motion cues and the potential bias towards visual appearance in existing video segmentation models.

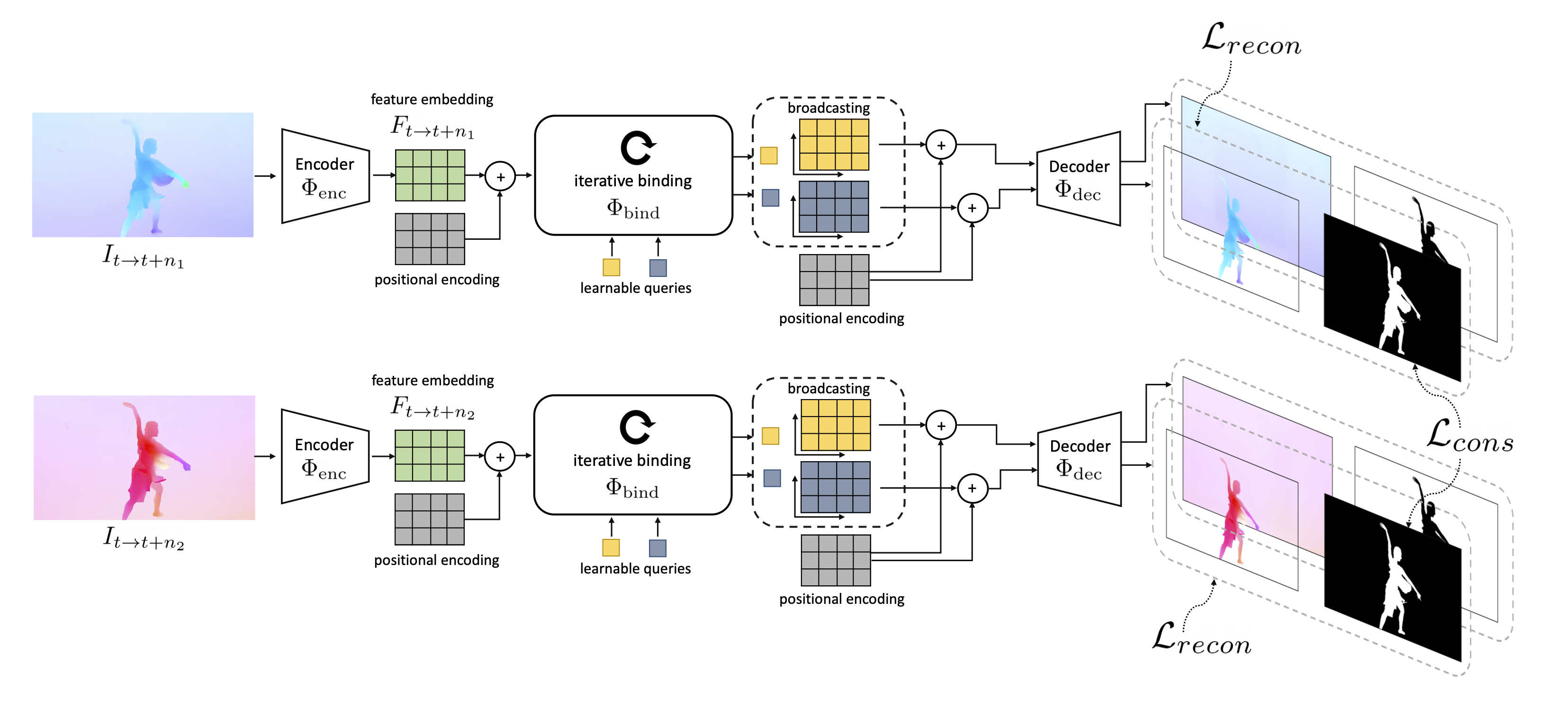

Comparison of results on DAVIS2016. Note that supervised approaches may use models pre-trained on ImageNet, but here we count only the number of images with pixel-wise annotations.

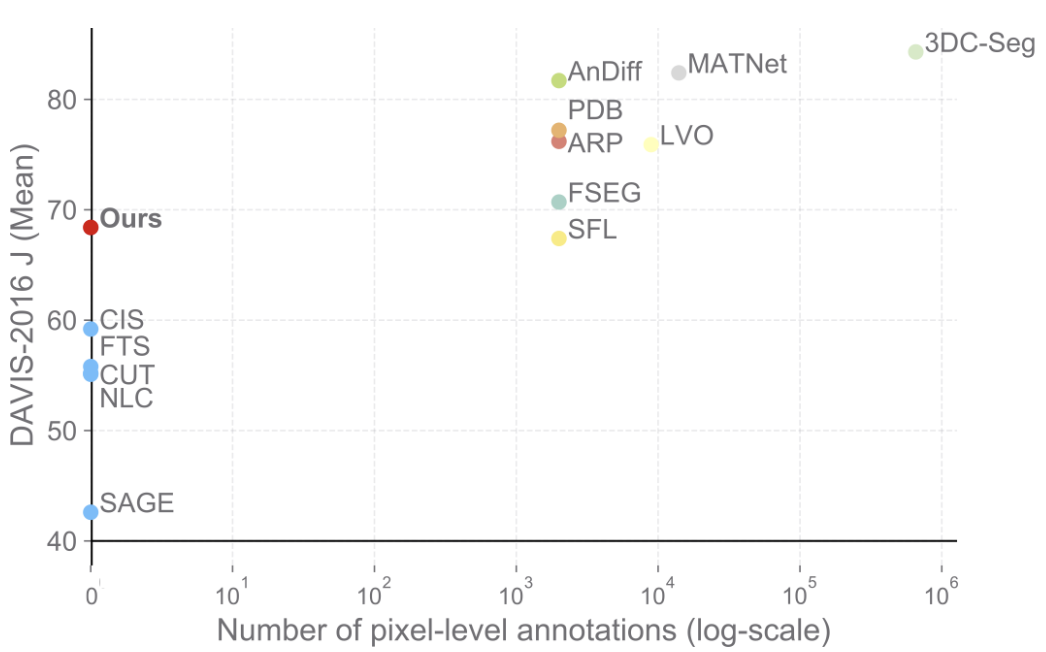

Full comparison on unsupervised video segmentation. We consider three popular datasets: DAVIS2016, SegTrack-v2 (STv2), and FBMS59. Models above the horizontal dividing line are trained without using any manual annotation, while models below are pre-trained on image or video segmentation datasets, e.g., DAVIS, YouTube-VOS, thus requiring ground truth annotations at training time. Numbers in parentheses denote the additional usage of significant post-processing, e.g., multi-step flow, multi-crop, temporal smoothing, CRFs.

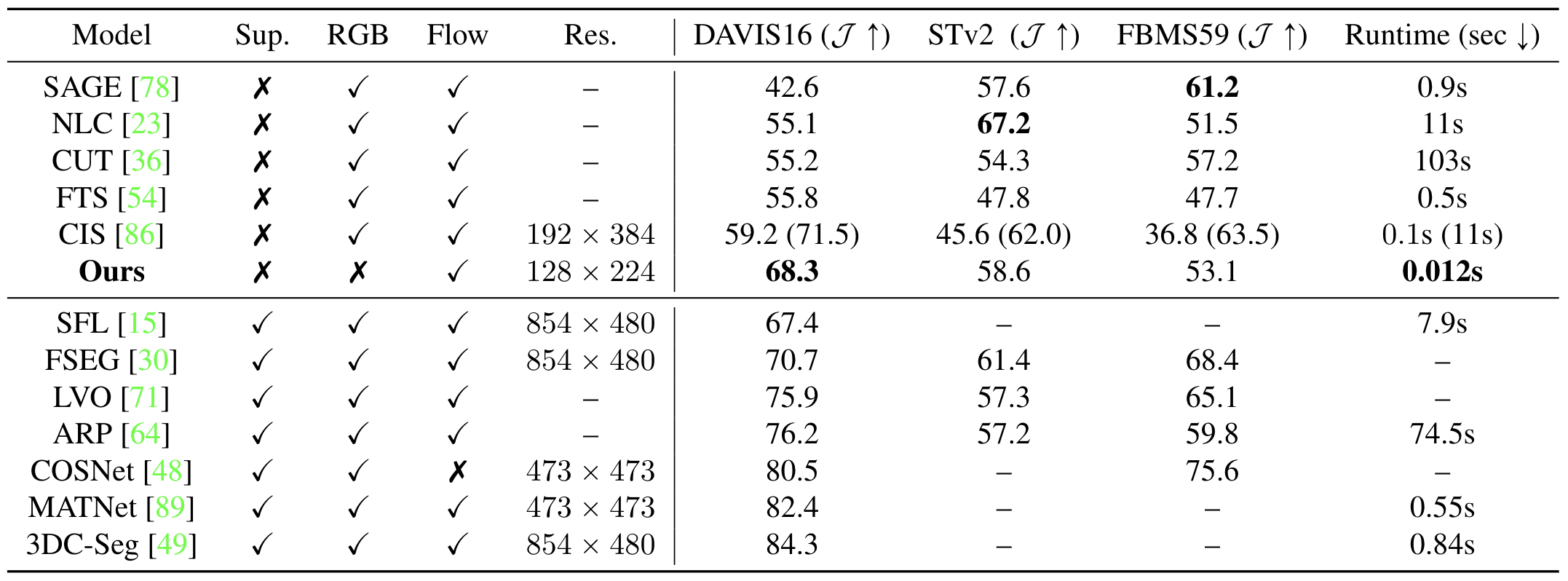

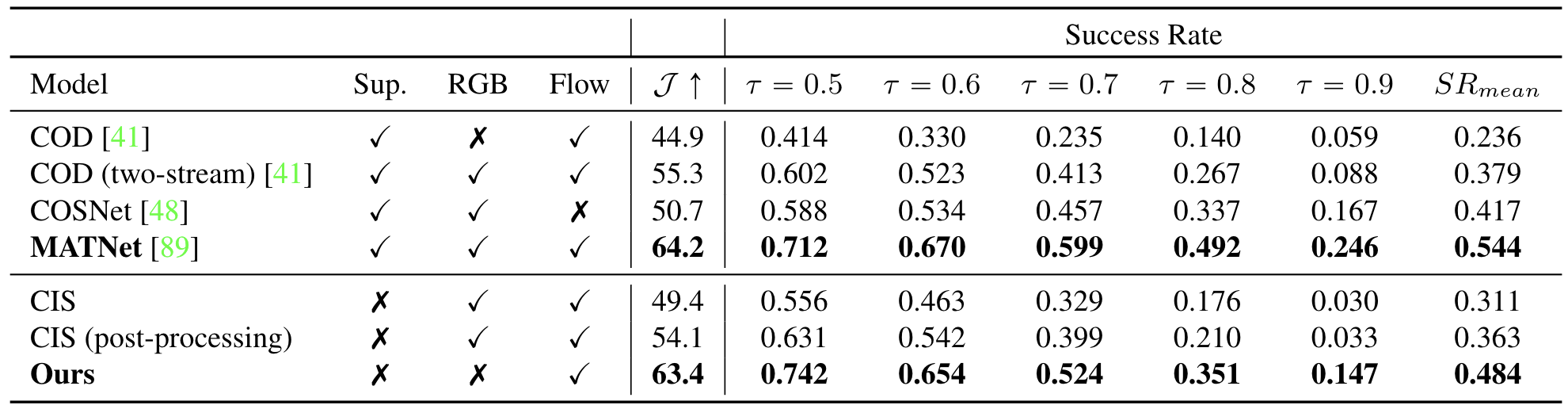

Comparison of results on the MoCA dataset. We report the successful localization rate at various thresholds. Both CIS and our model were pre-trained on DAVIS and fine-tuned on MoCA in a self-supervised manner. Note that our method achieves a Jaccard score comparable to MATNet (the 2nd best model on DAVIS), without using RGB inputs and without any manual annotation for training.
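As a rough illustration of the two metrics named in the MoCA caption, the sketch below computes a Jaccard score (intersection over union) and a success rate at several thresholds. It is not the evaluation code used for the reported numbers. It assumes that predictions and ground truth are given as axis-aligned boxes in `(x_min, y_min, x_max, y_max)` format, and the names `box_iou` and `success_rates` as well as the default threshold values are hypothetical choices made for this example.

```python
from typing import Dict, Sequence, Tuple

# Assumed box format for this sketch: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Jaccard index (intersection over union) of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def success_rates(
    preds: Sequence[Box],
    gts: Sequence[Box],
    thresholds: Sequence[float] = (0.5, 0.6, 0.7, 0.8, 0.9),  # assumed values
) -> Dict[float, float]:
    """Fraction of frames whose IoU reaches each threshold."""
    if not preds or len(preds) != len(gts):
        raise ValueError("preds and gts must be non-empty and of equal length")
    ious = [box_iou(p, g) for p, g in zip(preds, gts)]
    return {t: sum(iou >= t for iou in ious) / len(ious) for t in thresholds}


if __name__ == "__main__":
    # Two toy frames: a perfect match and a half-shifted box.
    preds = [(0, 0, 10, 10), (0, 0, 10, 10)]
    gts = [(0, 0, 10, 10), (5, 0, 15, 10)]
    print(success_rates(preds, gts))
```

Under these assumptions, a frame counts as successfully localized at a threshold when its IoU is at least that threshold, so the success rate can only stay the same or decrease as the threshold grows.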

C. Yang, H. Lamdouar, E. Lu, A. Zisserman, W. Xie. Self-supervised Video Object Segmentation by Motion Grouping. International Conference on Computer Vision (ICCV), 2021.

Acknowledgements: This template was originally made by Phillip Isola and Richard Zhang for a colorful ECCV project.